Author: Lamhot Siagian 🔗 LinkedIn

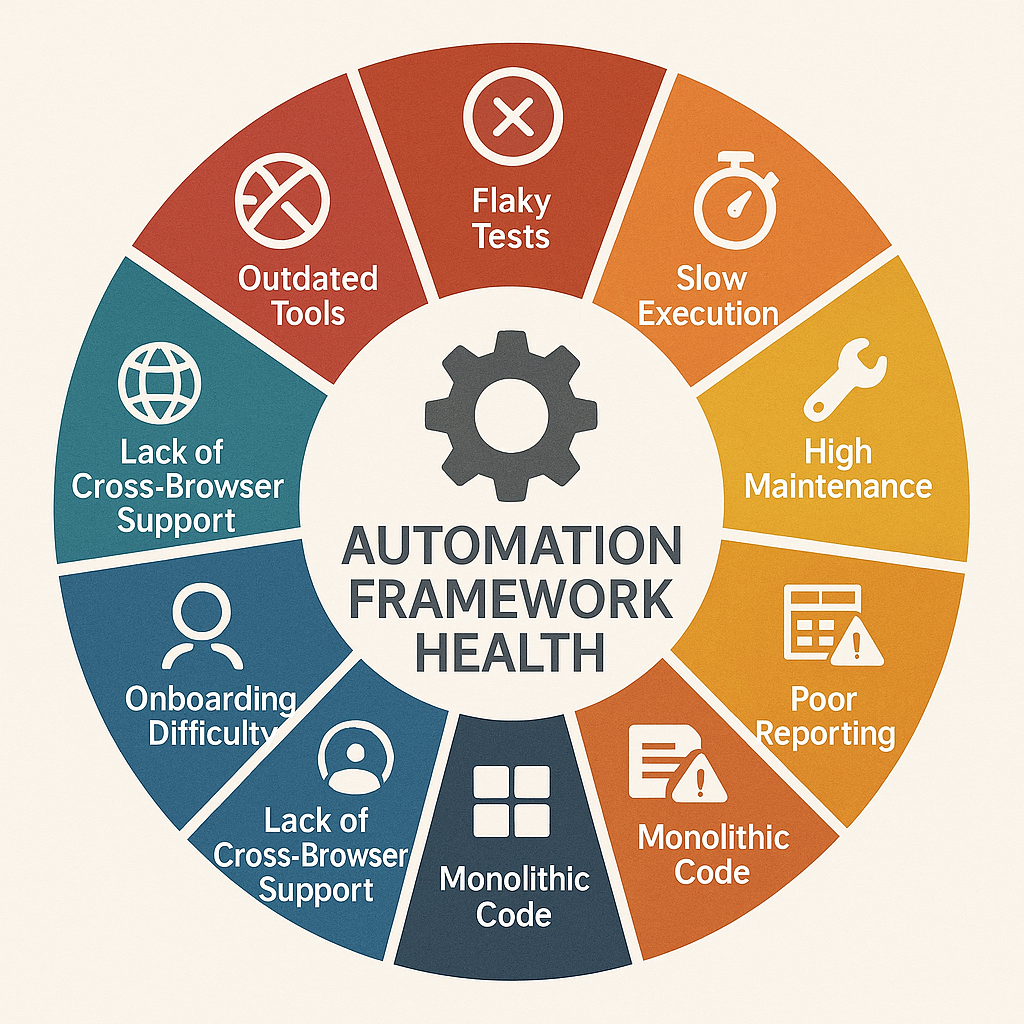

1. 🔄 Frequent “Flaky” Tests

When automated tests randomly pass or fail without any code or application changes, it’s usually a sign that your framework can’t reliably synchronize with dynamic elements (AJAX loads, animations) or external factors (network latency, third-party services). Flakiness erodes team confidence in your suite, leading to constant reruns and hours lost hunting phantom failures.

To fix this, introduce robust waiting strategies—explicit waits, polling loops, and custom retry wrappers—for the patterns you observe. Encapsulate all synchronization logic in reusable helper methods or a base page object so you avoid scattering sleeps everywhere. Where possible, mock or stub unstable dependencies in CI, and tag intermittent tests so you can prioritize stablization over time.

2. 🐢 Long Execution Times

If your regression suite stretches into hours (or days!), you lose the rapid feedback loop developers need. Slow tests often come from end-to-end coverage on every change, lack of parallelization, or unnecessary UI steps that could be tested at the API or unit level.

Shrink your cycle by creating test tiers—run a small “smoke” set on every commit, a medium “integration” set nightly, and the full “regression” suite weekly. Leverage parallel execution (TestNG, JUnit 5, Playwright) to distribute tests across threads or machines. For data-only checks, bypass the UI and call services directly. Finally, use headless browsers and containerized runners to spin up and tear down environments in seconds.

3. 🛠️ High Maintenance Overhead

When every tiny UI tweak breaks scores of tests—or you spend more time updating locators than writing new scenarios—your framework probably lacks proper abstraction. Hard-coded selectors, duplicated helper functions, and scattered utilities lead to brittle, high-maintenance code.

Refactor toward a Page Object (or Screen Object) Model: represent pages and components as classes with well-named methods (loginPage.enterUsername()). Centralize locator definitions and common actions (click, type, wait) into a shared library. Introduce a service or API layer for non-UI validations. Enforce coding standards, peer reviews, and linting for your test code to catch inconsistencies early.

4. 🔒 Hard-Coded Test Data

Embedding usernames, URLs, credentials, or environment flags directly in your test scripts means any data change forces a code update—and often risks leaking secrets into version control. Hard-coded data also limits your coverage, since you can’t easily vary inputs to simulate real-world scenarios.

Adopt a data-driven approach: store test inputs in external files (JSON, YAML, CSV) or a lightweight database, and feed them into your tests via parameterization (@DataProvider in TestNG or Examples in Cucumber). Centralize configuration (URLs, credentials) in a properties file or secure vault and inject at runtime. Use environment variables or CI secrets management to handle sensitive values safely.

5. 📊 Poor or No Reporting

If your test results live only in console logs or obscure XML blobs, diagnosing failures becomes a chore. You lose history, screenshots, logs, and trend data, making it impossible to track flaky patterns or demonstrate ROI to stakeholders.

Integrate a reporting library (Allure, ExtentReports) that generates interactive HTML dashboards with embedded screenshots, logs, and trend charts. Configure your CI server to archive and display these artifacts alongside each build. Add Slack or email notifications summarizing failures with direct links to the detailed report. Over time, you’ll build a reliable test-health dashboard rather than digging through raw logs.

6. 🚫 No CI/CD Integration

Manually kicking off tests after each deployment wastes time and invites human error. Without automated quality gates in your CI/CD pipeline, defects slip into staging or production—and teams lack immediate feedback on breaking changes.

Connect your suite to Jenkins, GitHub Actions, GitLab CI, or Azure DevOps: define pipeline stages for build, unit tests, deploy to test env, run automation, and publish results. Use Docker-based runners to ensure environment consistency. Fail the pipeline on critical test breaks and gate merges or deployments on a green status. This ensures tests run automatically and consistently on every commit or pull request.

7. 🧩 Monolithic & Un-Modular Code

A single massive test script or “everything in one package” design makes maintenance and parallel execution a nightmare. Teams can’t share or reuse code, and CI can’t spin up isolated contexts without conflicts.

Refactor into modules: separate test definitions, page/service objects, utilities, and data providers into distinct packages or libraries. Extract common helpers into a “core” automation library and version it independently. Use semantic versioning to manage updates. Modular code lets each component evolve on its own and supports parallel or distributed execution in isolated containers or JVMs.

8. 📚 Difficulty Onboarding New Engineers

If new hires need weeks to understand how to set up, write, and run tests, your framework is too complex or undocumented. Steep learning curves slow productivity and discourage collaboration.

Invest in clear, up-to-date documentation: a README outlining setup steps, folder structure, naming conventions, and sample tests. Include code templates, quick-start guides, and common troubleshooting tips. Record a short screencast or host a live walkthrough. Encourage new engineers to improve docs and add scenarios as part of their ramp-up tasks.

9. 🌐 Lack of Cross-Browser / Cross-Platform Support

If your suite runs only in Chrome on desktop, you miss bugs on Firefox, Edge, Safari, or mobile devices. Modern users span browsers and form factors; your automation must keep pace or risk shipping UI/UX inconsistencies.

Leverage multi-browser runners (Playwright’s built-in support) or a Selenium Grid (self-hosted or via BrowserStack). Abstract browser setup in a factory class that reads target browser/platform from configuration. For mobile, integrate Appium or use a cloud device lab. Parameterize CI jobs to execute on different browser/OS combos—e.g., Chrome on Windows, Safari on macOS, Chrome on Android—to catch environment-specific issues early.

10. 🕰️ Outdated Tools or Dependencies

Relying on legacy Selenium versions, unsupported drivers, or old CI plugins invites compatibility issues, security vulnerabilities, and missed feature improvements. Technical debt accumulates, making major upgrades painful.

Schedule regular audits and upgrades of your automation stack: subscribe to release notes for Selenium, Playwright, language bindings, and CI plugins. Use dependency-management tools (Maven, Gradle, npm) with a dedicated “dependency check” task. Allocate time each sprint for updates and run your full suite against new versions in an “upgrade” branch. By staying current, you leverage community patches, performance gains, and avoid last-minute large-scale migrations.

Leave a Reply